Integrating Deep Learning and Topic Model in Context-Aware Recommendation

Abstract

Traditional Collaborative Filtering (CF) methods for recommender systems suffer from data sparsity. Exploiting the abundant information in user reviews is an effective way to alleviate this problem. Recently, several models have been proposed that use topic models or deep learning to understand reviews in recommendation tasks, but both approaches have drawbacks. In this paper, we combine the two approaches to gain a deeper understanding of user reviews and improve recommendation results. First, we propose the LMF model, which integrates an LSTM network into probabilistic matrix factorization (PMF), and evaluate its effectiveness in experiments. Second, we extend LMF with topic information to construct a new model, LTMF. LTMF provides a flexible and effective framework that integrates information from topic models and deep learning into recommendation. Extensive experiments on real-world Amazon datasets show that combining the information from topic models and deep learning significantly improves rating prediction accuracy, and that LTMF outperforms state-of-the-art recommendation models even when the data is extremely sparse.

Intuition

Suppose we have the following two reviews in a recommender system. Interpreting the sentences with a topic model, a CNN, and an LSTM gives different results.

| Example |
|---|
| 1. I prefer apple than banana when choosing fruits. |
| 2. I prefer apple than google when choosing jobs. |

A topic model is a bag-of-words model that treats a sentence as a collection of individual words. It ignores contextual information, so it cannot distinguish the speaker's preference.
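The bag-of-words limitation can be illustrated with a minimal sketch. The second sentence below is a hypothetical reordering of Example 1 (it is not one of the reviews above) that expresses the opposite preference; the `bag_of_words` helper is an illustrative name, not part of any topic-model library.

```python
from collections import Counter

def bag_of_words(sentence):
    """Lowercase, drop the trailing period, and count words; word order is discarded."""
    return Counter(sentence.lower().rstrip(".").split())

s1 = "I prefer apple than banana when choosing fruits."
# Hypothetical reordering with the opposite preference (assumption for illustration).
s2 = "I prefer banana than apple when choosing fruits."

# Both sentences map to the same multiset of words.
print(bag_of_words(s1) == bag_of_words(s2))
```

Because the two representations are identical, any model that only sees word counts has no way to tell which fruit the speaker prefers.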

A CNN uses sliding windows to capture local context and word order in a sentence. However, the windows are usually small, so a CNN fails to link words at the beginning and the end of a sentence. In the examples, a CNN cannot distinguish the different meanings of the word "apple" because of the small window size.
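A small sketch of the window argument, assuming a filter width of 3 tokens (the width is an assumption chosen for illustration, and real CNNs also apply pooling and may stack layers). It checks whether any single window contains both "apple" and the disambiguating word at the end of each example sentence.

```python
def windows(tokens, size):
    """All contiguous windows of `size` tokens, as a 1-D convolution filter would see them."""
    return [tuple(tokens[i:i + size]) for i in range(len(tokens) - size + 1)]

def min_window_to_cover(tokens, word_a, word_b):
    """Smallest window size that contains both words (first occurrences)."""
    return abs(tokens.index(word_a) - tokens.index(word_b)) + 1

s1 = "i prefer apple than banana when choosing fruits".split()
s2 = "i prefer apple than google when choosing jobs".split()

WINDOW = 3  # assumed filter width, for illustration only

sees_cue_1 = any("apple" in w and "fruits" in w for w in windows(s1, WINDOW))
sees_cue_2 = any("apple" in w and "jobs" in w for w in windows(s2, WINDOW))
needed = min_window_to_cover(s1, "apple", "fruits")
print(sees_cue_1, sees_cue_2, needed)
```

With a width of 3, no window covers both "apple" and the final word; a single window would need to span 6 tokens to do so.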

LSTM is a special type of RNN that can retain information from the whole sentence. It therefore avoids the ambiguity problem of CNN and retains more context information than a topic model. Intuitively, LSTM should perform better at interpreting user reviews in recommendation tasks. Based on this, we integrate LSTM into traditional CF methods and propose the LMF model.

Model Overview

For a more detailed explanation, please refer to our paper.

On the left are the parameter relations of the LTMF model, where shaded nodes are data (R: ratings, D: reviews) and the other nodes are parameters. A single connection line indicates a constraint relationship between two nodes. A double connection means the relationship is bidirectional, so the two nodes affect each other's results. On the right is the detailed architecture of our LSTM model for item review documents.
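As a rough illustration of what a constraint between ratings, item vectors, and review-derived vectors can look like, here is a generic PMF-style objective with an extra penalty that pulls each item vector toward a vector computed from its reviews. This is only a sketch under stated assumptions: the actual LMF and LTMF objectives are defined in the paper, and the function name, the penalty form, and the weights `lam_u` and `lam_v` are hypothetical.

```python
def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def objective(ratings, U, V, text_vecs, lam_u=0.1, lam_v=0.1):
    """Generic sketch, NOT the paper's objective.

    ratings:   list of (user_index, item_index, rating) triples
    U, V:      user and item latent vectors (lists of lists)
    text_vecs: one vector per item, assumed to come from a review encoder
    """
    # Squared error between observed ratings and the inner product u_i . v_j.
    err = sum((r - dot(U[i], V[j])) ** 2 for i, j, r in ratings)
    # L2 penalty on user vectors.
    reg_u = sum(dot(u, u) for u in U)
    # Penalty tying each item vector to its text-derived vector (assumed form).
    reg_v = sum(
        sum((v - t) ** 2 for v, t in zip(V[j], text_vecs[j]))
        for j in range(len(V))
    )
    return 0.5 * err + 0.5 * lam_u * reg_u + 0.5 * lam_v * reg_v

U = [[1.0, 0.0]]
V = [[2.0, 1.0]]
ratings = [(0, 0, 2.0)]
print(objective(ratings, U, V, text_vecs=[[2.0, 1.0]]))  # item vector matches text vector
print(objective(ratings, U, V, text_vecs=[[0.0, 0.0]]))  # mismatch adds a penalty
```

In this toy setup the second value is larger than the first, which is the sense in which the text side constrains the item vectors.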

LTMF uses the item vector (V) as the bridge that combines the information from the topic model and the LSTM. It is also easy to replace the LSTM part with another deep learning network, as we show in the experiments. In conclusion, LTMF provides an effective framework for integrating topic models with deep learning networks for recommendation.

Experiments

Datasets

| Dataset | # users | # items | # ratings | # words | avg. words per item | density |
|---|---|---|---|---|---|---|
| Amazon Instant Video (AIV) | 29753 | 15147 | 135167 | 1313087 | 86.69 | 0.0030% |
| Apps for Android (AFA) | 240931 | 51599 | 1322839 | 3395107 | 65.80 | 0.0106% |
| Baby (BB) | 71812 | 42515 | 379969 | 3478861 | 81.83 | 0.0124% |
| Musical Instruments (MI) | 29005 | 48751 | 150526 | 4214058 | 86.44 | 0.0106% |
| Office Product (OP) | 59844 | 60628 | 286521 | 4996252 | 82.41 | 0.0079% |
| Pet Supplies (PS) | 93270 | 70063 | 477859 | 5525419 | 78.86 | 0.0073% |
| Grocery and Gourmet Food (GGF) | 86389 | 108448 | 508676 | 8347120 | 76.97 | 0.0054% |
| Video Games (VG) | 84257 | 39422 | 476546 | 3648315 | 92.55 | 0.0143% |
| Patio Lawn and Garden (PLG) | 54167 | 57826 | 242944 | 4754605 | 82.22 | 0.0078% |
| Digital Music (DM) | 56812 | 156496 | 351769 | 6070200 | 38.79 | 0.0040% |
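The derived columns can be recomputed from the raw counts. The sketch below assumes that density means ratings divided by (users × items) and that the per-item word average is total words divided by items; these definitions are assumptions, checked here only against the Apps for Android row.

```python
def density(n_users, n_items, n_ratings):
    """Fraction of the user-item matrix that holds an observed rating (assumed definition)."""
    return n_ratings / (n_users * n_items)

def words_per_item(n_words, n_items):
    """Average number of review words per item (assumed definition)."""
    return n_words / n_items

# Counts copied from the Apps for Android (AFA) row.
afa_density = f"{density(240931, 51599, 1322839):.4%}"
afa_words = f"{words_per_item(3395107, 51599):.2f}"
print(afa_density, afa_words)  # 0.0106% 65.80
```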

Competitors

| Model | Description |
|---|---|
| Probabilistic Matrix Factorization (PMF) [Salakhutdinov et al.] | A standard matrix factorization model for recommender systems that uses only rating information. |
| Hidden Factors as Topics (HFT) [McAuley et al.] | A state-of-the-art topic-model-based recommendation model that combines review information with rating information. It uses LDA (a kind of topic model) to capture unstructured textual information in reviews. |
| Convolutional Matrix Factorization (ConvMF) [Kim et al.] | A state-of-the-art CNN-based recommendation model. It uses a CNN to capture contextual information in item reviews. |
| LSTM matrix factorization (LMF) | Our proposed LSTM-based recommendation model, which uses an LSTM network to capture contextual information in item reviews. |
| LSTM Topic matrix factorization (LTMF) | Our proposed recommendation framework, which integrates LSTM with a topic model for review understanding in recommenders. It is easy to replace LSTM with other deep learning networks. |
| Convolutional Topic matrix factorization (CTMF) | A comparison model derived from LTMF by replacing the LSTM network with a CNN. We use it to explore the effectiveness of combining deep learning and topic models in review understanding and recommendation. |

Results

For a more detailed explanation, please refer to our paper.

1. MSE results of rating prediction.

| Dataset | (a) PMF | (b) HFT | (c) ConvMF | (d) CTMF | (e) LMF | (f) LTMF |
|---|---|---|---|---|---|---|
| AIV | 1.436 (0.02) | 1.368 (0.02) | 1.388 (0.02) | 1.350 (0.03) | 1.321 (0.02) | 1.309 (0.02) |
| AFA | 1.673 (0.01) | 1.649 (0.01) | 1.651 (0.01) | 1.648 (0.01) | 1.635 (0.01) | 1.629 (0.01) |
| BB | 1.643 (0.01) | 1.577 (0.01) | 1.556 (0.01) | 1.531 (0.01) | 1.513 (0.01) | 1.499 (0.01) |
| MI | 1.555 (0.03) | 1.423 (0.02) | 1.399 (0.02) | 1.367 (0.02) | 1.317 (0.02) | 1.302 (0.02) |
| OP | 1.622 (0.02) | 1.547 (0.02) | 1.501 (0.02) | 1.466 (0.02) | 1.432 (0.02) | 1.420 (0.02) |
| PS | 1.796 (0.01) | 1.736 (0.01) | 1.698 (0.02) | 1.680 (0.02) | 1.646 (0.02) | 1.626 (0.01) |
| GGF | 1.585 (0.01) | 1.539 (0.01) | 1.478 (0.01) | 1.446 (0.02) | 1.393 (0.01) | 1.386 (0.01) |
| VG | 1.510 (0.01) | 1.468 (0.01) | 1.463 (0.01) | 1.446 (0.02) | 1.393 (0.01) | 1.386 (0.01) |
| PLG | 1.854 (0.02) | 1.779 (0.02) | 1.710 (0.02) | 1.678 (0.02) | 1.628 (0.02) | 1.621 (0.02) |
| DM | 1.197 (0.01) | 1.171 (0.01) | 1.032 (0.01) | 0.990 (0.01) | 0.968 (0.01) | 0.965 (0.01) |

The table above shows the MSE of rating prediction for each model, with standard deviations in parentheses. Compared with the other models, our LMF and LTMF achieve significant improvements, and LTMF achieves the best result on all datasets.
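For reference, the MSE metric reported above is the mean of squared differences between predicted and observed ratings. A minimal sketch follows; the sample predictions and ratings are made up for illustration and do not come from the experiments.

```python
def mse(predicted, actual):
    """Mean squared error between two equal-length sequences of ratings."""
    if len(predicted) != len(actual):
        raise ValueError("predicted and actual must have the same length")
    return sum((p - a) ** 2 for p, a in zip(predicted, actual)) / len(actual)

# Hypothetical predictions and ground-truth ratings on a 1-5 scale.
predicted = [4.0, 3.0, 5.0]
actual = [5.0, 3.0, 4.0]
print(mse(predicted, actual))
```

Lower values are better, so each step from column (a) to column (f) in the table corresponds to a smaller average squared error.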

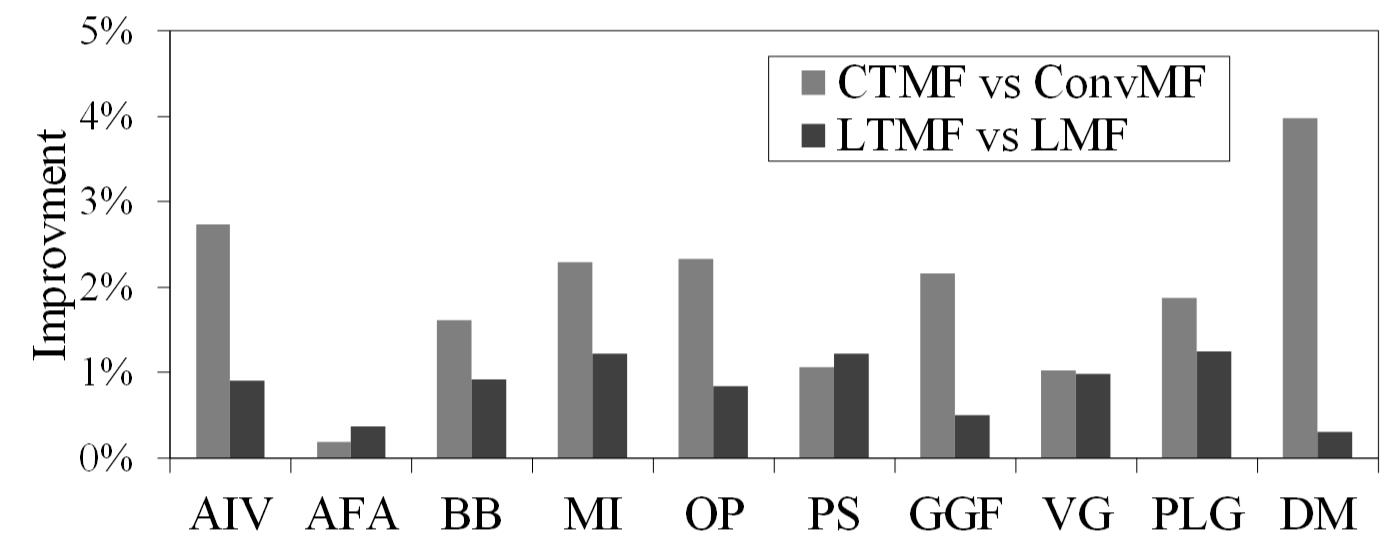

2. Histogram of Improvements.

Figure 2 shows the MSE improvements of HFT, ConvMF and LMF over PMF on datasets with different sparsity. All three models use both rating and review information. Among them, our LMF model consistently achieves the best performance on all datasets, which indicates that LSTM gains a better understanding of reviews.

Figure 3 compares the two original deep learning models (LMF and ConvMF) with the extended models (LTMF and CTMF) to explore the effectiveness of combining deep learning with a topic model. Both extended models outperform the original models, and LTMF consistently achieves the best results on all datasets. The improvements confirm our conjecture that recommendation results can be improved by combining structural information (from deep learning) with unstructured information (from the topic model).
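The improvements discussed here can be recomputed from the MSE table. The sketch below uses the AIV row; whether the figures plot absolute or relative gains is not stated in this page, so both are computed, and the `gain` helper is an illustrative name.

```python
# MSE values copied from the AIV row of the results table.
aiv = {"PMF": 1.436, "HFT": 1.368, "ConvMF": 1.388,
       "CTMF": 1.350, "LMF": 1.321, "LTMF": 1.309}

def gain(mse_by_model, model, baseline):
    """Return (absolute, relative) MSE reduction of `model` over `baseline`."""
    absolute = mse_by_model[baseline] - mse_by_model[model]
    return absolute, absolute / mse_by_model[baseline]

# Review-aware models against the rating-only baseline.
for model in ("HFT", "ConvMF", "LMF"):
    a, r = gain(aiv, model, "PMF")
    print(f"{model} vs PMF: {a:.3f} ({r:.2%})")

# Topic-extended models against their deep-learning-only counterparts.
for model, base in (("CTMF", "ConvMF"), ("LTMF", "LMF")):
    a, r = gain(aiv, model, base)
    print(f"{model} vs {base}: {a:.3f} ({r:.2%})")
```

The same function can be applied to any other row of the table by swapping in that row's values.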

3. "Cold-start" Recommendation.

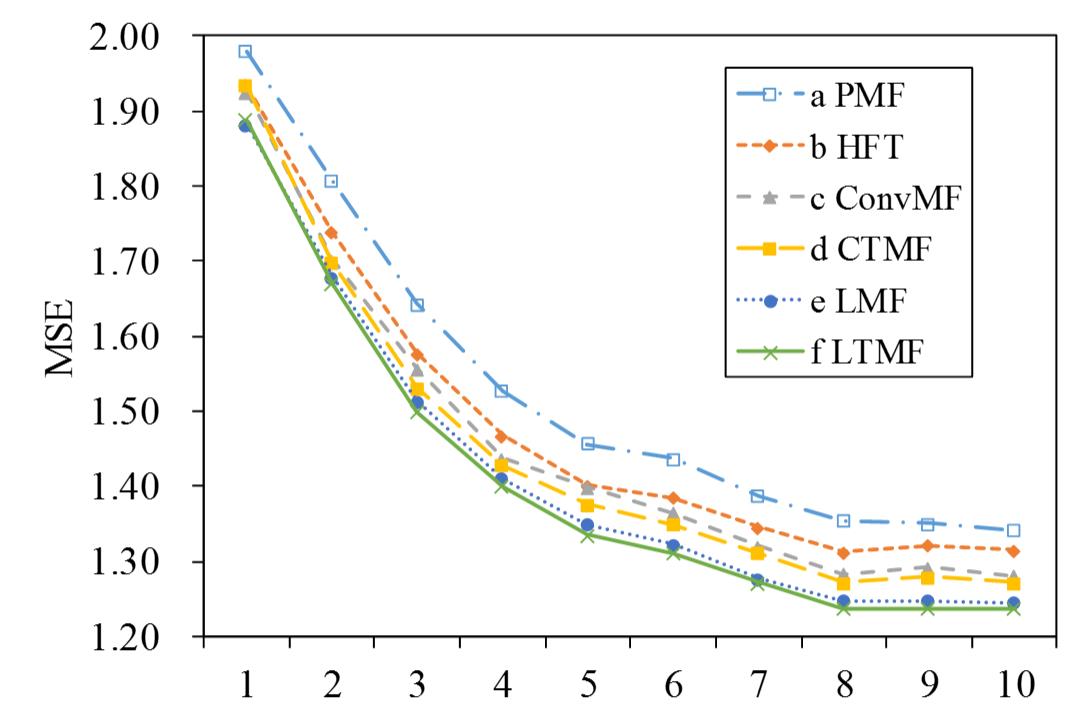

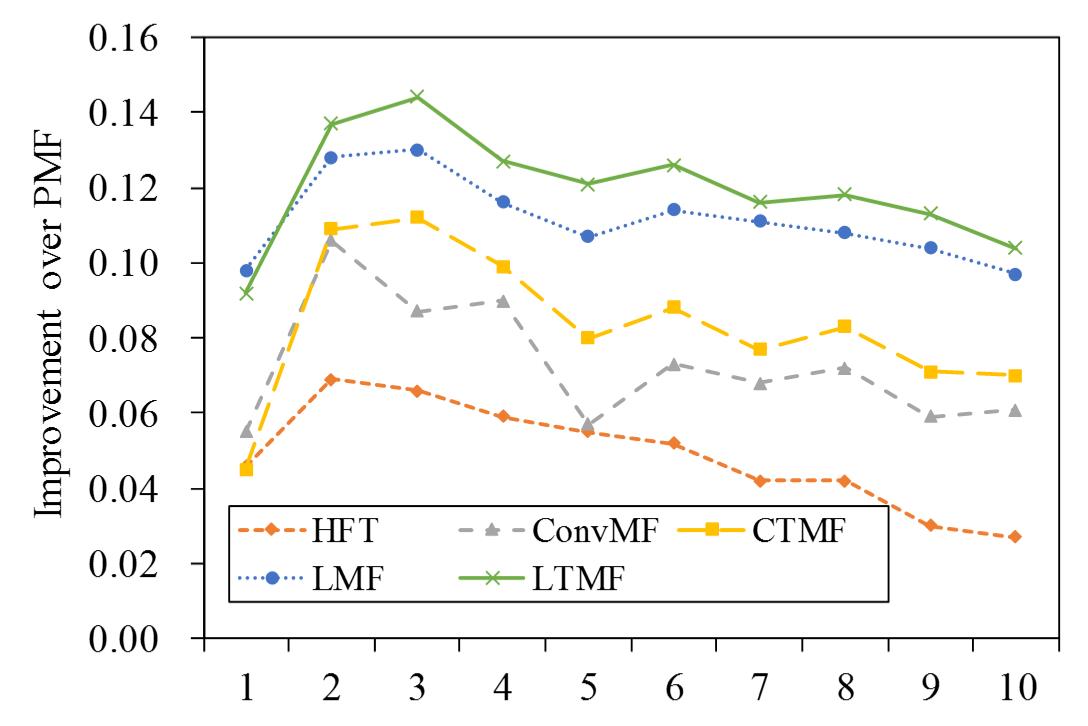

Figure 4. Results for "cold-start" recommendation with a limited number of user ratings and reviews. Left: the MSE values of all models. Right: the gains in MSE compared with PMF.

Figure 4 shows the results of these models on "cold-start" recommendation. We take the "Baby" dataset and refilter it so that every user has at least N ratings (N varies from 1 to 10). All models gain recommendation accuracy as N increases, which means that user and item latent features can be extracted better when more information is available. In addition, when N is relatively small, models that use both review and rating information achieve larger improvements over PMF. This suggests that review information is an effective supplement when rating data is scarce. Among these models, our LTMF model significantly outperforms the others and shows a better ability to make "cold-start" recommendations.
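The refiltering step can be sketched as follows, assuming ratings are stored as (user, item, rating) triples; the storage format, the helper name, and the toy data are assumptions for illustration, not the preprocessing code used in the experiments.

```python
from collections import Counter

def filter_min_ratings(ratings, n):
    """Keep only the ratings of users who have at least `n` ratings in total."""
    counts = Counter(user for user, _item, _rating in ratings)
    return [row for row in ratings if counts[row[0]] >= n]

# Toy data: u1 has 2 ratings, u2 has 1, u3 has 3.
ratings = [
    ("u1", "i1", 5), ("u1", "i2", 4),
    ("u2", "i1", 3),
    ("u3", "i3", 2), ("u3", "i1", 1), ("u3", "i2", 5),
]

for n in (1, 2, 3):
    print(n, len(filter_min_ratings(ratings, n)))
```

Small values of N keep users with very few ratings in the data, which is the setting where review text has the most room to help.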

4. Qualitative Analysis.

Topic words of the "Office Product" dataset produced by HFT.

| topic1 | topic2 | topic3 | topic4 | topic5 |
|---|---|---|---|---|
| envelope | markers | pins | wallet | planner |
| erasers | compatible | scale | notebooks | keyboard |
| needs | lead | huge | window | tab |
| numbers | credit | notebook | remove | |
| letters | nice | document | cardboard | stickers |
| christmas | camera | attach | plug | clips |
| bleed | copy | address | durable | chairs |
| fall | party | push | stapler | red |

Topic words of the "Office Product" dataset discovered by LTMF.

| topic1 | topic2 | topic3 | topic4 | topic5 |
|---|---|---|---|---|
| bands | bags | scale | wallet | folder |
| drum | camera | document | clock | folders |
| remote | cabinet | magnets | coins | binder |
| chalk | compatible | monitors | notebooks | stickers |
| presentation | tray | pins | shredder | remove |
| buttons | party | fax | bookmark | head |
| exchange | markers | cord | notebook | tab |
| numbers | dividers | usb | plug | files |

To evaluate whether combining LSTM and a topic model yields a better understanding of user reviews, we compare the topic words discovered by HFT and LTMF. HFT produces some adjectives and verbs that contribute little to topic clustering (e.g. "nice", "huge", "attach"). These words do not appear in the top word lists of LTMF, because the extra information from LSTM supplements the topic model. A similar effect appears for the words "document" and "compatible". The word "document" is a clear topic word, so LTMF gives it a larger weight in the topic word list. The word "compatible" is an adjective and carries less topic information than a noun, so LTMF decreases its weight and puts "camera" in second place. From this comparison, we observe that LTMF obtains a clearer topic clustering than HFT, which also implies that combining LSTM and a topic model achieves a better understanding of user reviews.
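The rank changes described above can be checked directly against the two topic-word tables. The sketch below copies the topic2 and topic3 columns of the HFT table and all rows of the LTMF table as printed, and assumes that the row order reflects the word's rank within a topic; the `rank` helper is an illustrative name.

```python
# topic2 and topic3 columns of the HFT table, top to bottom.
hft_topic2 = ["markers", "compatible", "lead", "credit", "nice", "camera", "copy", "party"]
hft_topic3 = ["pins", "scale", "huge", "notebook", "document", "attach", "address", "push"]

# Rows of the LTMF table (topic1..topic5 per row).
ltmf_rows = [
    ["bands", "bags", "scale", "wallet", "folder"],
    ["drum", "camera", "document", "clock", "folders"],
    ["remote", "cabinet", "magnets", "coins", "binder"],
    ["chalk", "compatible", "monitors", "notebooks", "stickers"],
    ["presentation", "tray", "pins", "shredder", "remove"],
    ["buttons", "party", "fax", "bookmark", "head"],
    ["exchange", "markers", "cord", "notebook", "tab"],
    ["numbers", "dividers", "usb", "plug", "files"],
]
ltmf_topics = [list(col) for col in zip(*ltmf_rows)]  # transpose rows into topic columns
ltmf_topic2, ltmf_topic3 = ltmf_topics[1], ltmf_topics[2]
ltmf_words = {w for row in ltmf_rows for w in row}

def rank(words, word):
    """1-based position of `word` in a topic's word list, or None if absent."""
    return words.index(word) + 1 if word in words else None

print("compatible:", rank(hft_topic2, "compatible"), "->", rank(ltmf_topic2, "compatible"))
print("camera:", rank(hft_topic2, "camera"), "->", rank(ltmf_topic2, "camera"))
print("document:", rank(hft_topic3, "document"), "->", rank(ltmf_topic3, "document"))
print("missing from LTMF:", [w for w in ("nice", "huge", "attach") if w not in ltmf_words])
```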